Access Google Sheets in Minutes w/ Python API

Managing Excel files can be a pain. Imagine you are working on an Excel sheet that you just downloaded from the Google Drive when all of a sudden, a teammate updates the excel file. Now you have to re-download the file all over again! If this has ever happened to you, or you just hate re-uploading and downloading excel files manually, then keep on reading. In this post we are going to learn how to activate the Google Sheets API so we can automate accessing and writing sheets directly from and to the cloud.

First ensure that you have a Google account. Google usually gives free credits, so you will not have to pay for accessing their resources for the first year. Once done, let’s activate the API.

Activating the API

In order to activate this service, we have to tell Google that our account will be using it. So let us google Google Cloud. Then on their website hit the console button. This is where we can see all our account resources. Then in the search bar search for Google Sheets API and then click enable. Once done, also enable the Google Drive API by searching for Google Drive API and repeating the same process.

Great! So now that we’re done activating the API lets set up some service accounts. We’ll then give these service accounts access to certain sheets. Afterwards we’ll write some Python code to access this data.

Creating a Service Account

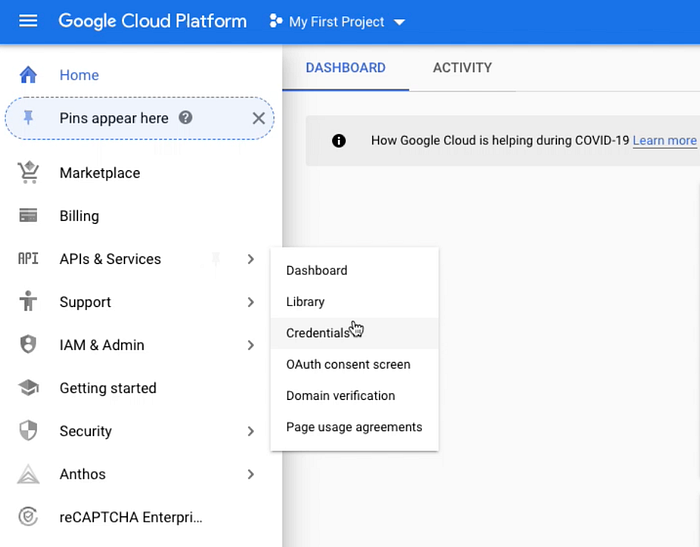

To create a Service Account we’ll have to go the credentials dashboard. Go to APIs & Services and then Credentials. There we will see page like the one below. Hit the Create Credentials button and then hit the Service Account button. Give it any name you want.

Once done naming, give the account editor rights (under Select Role > Project > Editor).

Great! Now we that created a Service Account, let’s finish up by creating a key. on the next page hit the Create Key button, select JSON, and then hit the Create button. This will download a json file to your computer. Now we can get coding.

Writing Code

Now that we have everything set up let’s write some python code. However, if you haven’t already, open up your command line and enter:

pip3 install gspread

pip3 install oauth2client.This will get us the required libraries for authentication into the google cloud and writing/reading from the google cloud.

Now in a .py file. I’ve named this file access.py. Within this file insert the following. Disclaimer: this code was created by Daniel E. Cook. All credit of this code goes to him: https://www.danielecook.com/from-pandas-to-google-sheets/

import gspread

import pandas as pd

from oauth2client.service_account import ServiceAccountCredentialsscope = ['https://spreadsheets.google.com/feeds','https://www.googleapis.com/auth/drive']

creds = ServiceAccountCredentials.from_json_keyfile_name('JSON FILE NAME HERE', scope)

client = gspread.authorize(creds)def iter_pd(df):

for val in df.columns:

yield val

for row in df.to_numpy():

for val in row:

if pd.isna(val):

yield ""

else:

yield valdef pandas_to_sheets(pandas_df, sheet, clear = True,formulas=[]):

# Updates all values in a workbook to match a pandas dataframe

if clear:

sheet.clear()

(row, col) = pandas_df.shape

cells = sheet.range("A1:{}".format(gspread.utils.rowcol_to_a1(row + 1, col)))

for cell, val in zip(cells, iter_pd(pandas_df)):cell.value = valsheet.update_cells(cells)

def newsheet(client,name):

client.add_worksheet(title=name, rows="100", cols="20")

Within the function call ServiceAccountCredentials.from_json_keyfile_name() enter the name of the json file that you downloaded earlier. iter_pd iterates through a pandas dataframe, it is called by pandas_to_sheets which writes to a specified google sheets file. The function newsheet creates a new sheet within an existing spreadsheet.

Accessing the Data

I’ve created a google sheet named “day”. This data is a bike sharing dataset usually used for Machine Learning experiments. You can download it here: https://archive.ics.uci.edu/ml/datasets/bike+sharing+dataset. In order for us to access the file we have to give access to our service account via it’s client-email. We can find this in the json file we downloaded earlier:

Copy the data between the quotation marks in the client_email field, then click share and give editor rights to that account.

Great! Now we can execute the following code:

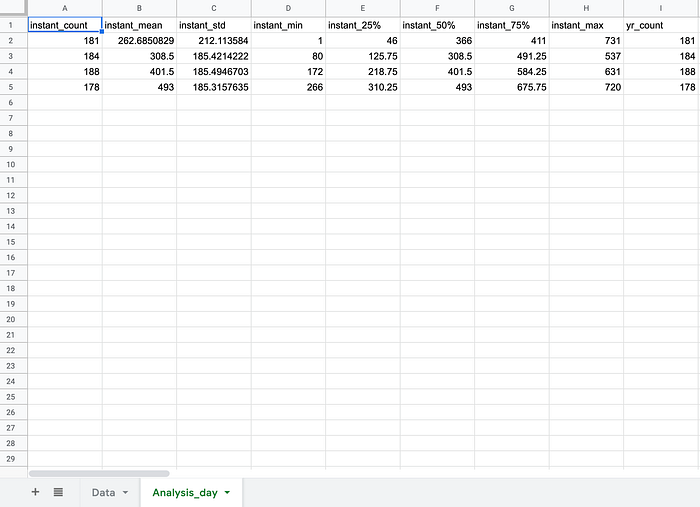

This will read the data into a DataFrame and then we will analyze the data by season. Then we will turn the columns into a single column, create a new sheet called Analysis_day and send the analysis back.

And we’re done! This comes in handy when you as a team are consistently working on one google spread sheet. When the database is updated, it can be analyzed almost instantaneously now!

This isn’t the only thing you can do with Google’s resources; there’s a lot more you can do with the Google Cloud platform. This includes accessing the Google maps API, AutoML and so much more.

Liked this article? Subscribe to me if you want get notifications when I post more related content: https://rich-gaogle.medium.com/subscribe

Not subscribed to Medium and want explore without limitations? Join now (Note this is a referral link and part of your membership will go to me if you join through this link): https://rich-gaogle.medium.com/membership

Want to learn how to integrate APIs with Google Sheets? Check that out here: https://rich-gaogle.medium.com/connect-your-apis-to-you-google-sheets-and-automate-them-to-run-every-week-eb029f497e88